Hack The Box Academy: AI Red Teamer

Course Review

Overall Course Review

The AI Red Teamer Job Role Path is Hack The Box Academy's comprehensive training program for cybersecurity professionals looking to specialize in offensive security testing against AI systems. Developed in collaboration with Google and aligned with Google's Secure AI Framework (SAIF), this path bridges the gap between traditional red teaming and the emerging discipline of AI security.

The course spans 12 modules covering the full spectrum of AI attack surfaces: from foundational AI/ML concepts through prompt injection, model privacy attacks, adversarial evasion techniques, supply chain risks, and deployment-level vulnerabilities. It combines theoretical grounding with hands-on exercises, giving learners practical experience manipulating model behaviors and developing AI-specific red teaming strategies.

This path fundamentally changed how I view machine learning systems. The early modules build intuition around how AI works, while the later modules, particularly the evasion trilogy and data attacks, dive deep into the mathematics of adversarial machine learning. Expect to hit walls with the math. I found ChatGPT invaluable for decoding unfamiliar mathematical notation and building the intuition needed to really understand these attacks. The struggle is worth it: by the end you'll understand not just how to break AI systems, but why the attacks work at a fundamental level and how to defend against them.

What sets this path apart is its dual focus on offense and defense. Throughout the modules, attack techniques are paired with mitigations, and the final modules on AI Privacy and AI Defense ensure you can secure the systems you've learned to compromise. The integration of Google's SAIF and OWASP Top 10 frameworks early on provides mental scaffolding that pays dividends as you progress through increasingly technical content.

The path drives home that AI systems are vulnerable at every layer (model, data, application, and system) and that traditional web vulnerabilities like XSS, SQLi, and command injection compound with AI-specific risks in ways that are often overlooked. You'll develop mathematical intuition around norms, gradients, and optimization that's essential for understanding adversarial ML at a fundamental level. While jailbreaks and prompt injection are the entry point that most people think of, data poisoning and evasion attacks are where the deep exploitation happens. You'll also learn that defensive measures like adversarial training, DP-SGD, and guardrails come with real tradeoffs against model utility: there's no free lunch in AI security.


If you're serious about AI security, this course is the real deal.

***

Module Reviews

Module 1: Fundamentals of AI


Created by PandaSt0rm, this foundational module clocks in at around 8 hours across 24 sections of pure theory: no hands-on exercises, no labs, just a deep dive into the concepts that underpin AI systems. It serves as both an approachable introduction and a reference you'll come back to throughout the path. The content spans five major domains:

The module touches on mathematical foundations but doesn't make them the focus. That said, having some background in statistics, linear algebra, and calculus will make the content click faster. If you're coming in cold on the math, expect to do some supplementary reading.

Rated Medium difficulty at Tier 0, I'd say that's spot on. No multivariable calculus or actual coding here, but the topics are certainly more advanced than your typical intro material. Overall an extremely solid survey of AI and machine learning and a wonderful place to start the learning journey.

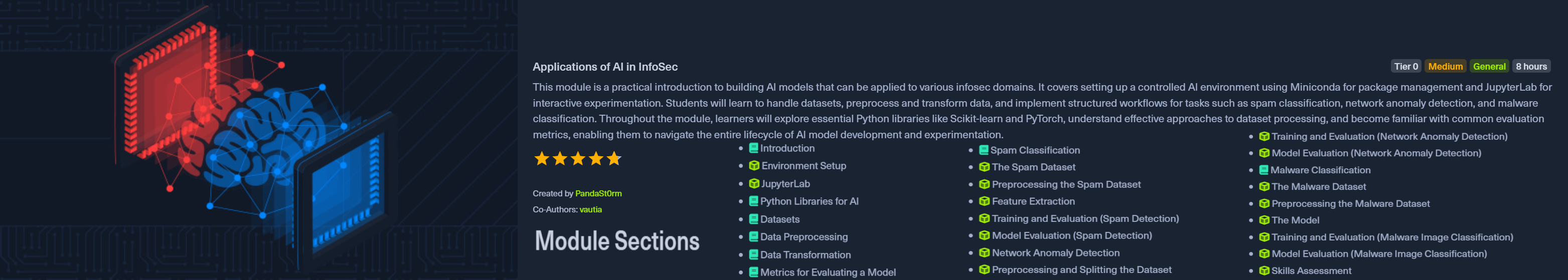

Module 2: Applications of AI in InfoSec

Created by PandaSt0rm with vautia, this 25-section module spans roughly 8 hours and is where theory meets practice. You'll build a complete AI development environment from scratch using Miniconda for package management and JupyterLab for interactive experimentation, then work through the entire model development lifecycle from raw data to trained classifiers.

The module covers:

The module recommends setting up your own environment rather than using the provided VM for better training performance. A machine with at least 4 GB of RAM and a reasonably modern multi-core CPU will serve you well here. A GPU is optional but helpful.

Rated Medium and costed at Tier 0, I'd say that's spot on. The module guides you through each step in detail without leaving you stranded. What I thoroughly enjoyed was how applicable everything felt: by the end I had working proof-of-concept models for real-world cybersecurity use cases, namely spam detection, network anomaly detection, and malware classification. That's not just theory; that's something you can actually build on.

Module 3: Introduction to Red Teaming AI


Created by vautia, this 11-section module runs about 4 hours and shifts gears from building AI systems to breaking them. It's the bridge between the foundational modules and the attack-focused content ahead, giving you the threat landscape overview before diving into specific exploitation techniques.

The module covers three core frameworks:

From there, it breaks down attack surfaces by component:

Prerequisites are the previous two modules (Fundamentals of AI and Applications of AI in InfoSec), which makes sense: you need to understand how these systems work before you can break them.

Rated Medium and costed at Tier I, this is an excellent introduction to secure AI and AI red teaming. What I particularly enjoyed was the early introduction of the Google SAIF and OWASP Top 10 frameworks. Having these in the back of your mind as you dive deeper into the technical topics gives you a mental scaffold to hang everything on.

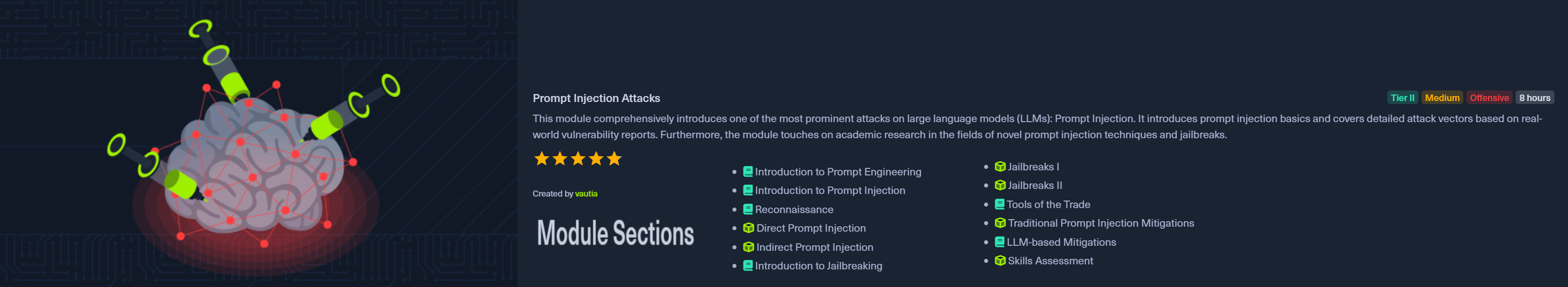

Module 4: Prompt Injection Attacks

Created by vautia, this 12-section module runs about 8 hours and marks your entry into Tier II offensive content. It's a comprehensive deep dive into one of the most prominent attack vectors against large language models: prompt injection.

The module covers four main areas:

You'll start with prompt engineering fundamentals, move through reconnaissance techniques, then work through increasingly sophisticated injection and jailbreak methods. The "Tools of the Trade" section introduces automation and tooling for prompt injection testing.

Prerequisites build on everything prior: Fundamentals of AI, Applications of AI in InfoSec, and Introduction to Red Teaming AI.

Rated Medium and costed at Tier II, I thoroughly enjoyed this module as it introduces the kind of thinking required to perform adversarial AI testing. The different jailbreaking techniques provide a lot of real-world value, and honestly, when people think of AI pentesting, jailbreaks are what they tend to think of first. This module delivers on expectations.

Module 5: LLM Output Attacks

Created by vautia, this 14-section module runs about 8 hours and flips the script from input manipulation to output exploitation. Where prompt injection focuses on what goes into an LLM, this module examines what comes out and how that output can compromise systems.

The module covers traditional web vulnerabilities in an LLM context:

It also covers abuse attacks, the weaponization side of LLMs: hate speech campaigns, misinformation generation, and the detection and mitigation of these attacks. The module wraps up with safeguard case studies and legislative regulation, grounding the technical content in real-world policy.

Prerequisites are notably heavier here, requiring both the AI path modules (Fundamentals, Applications, Intro to Red Teaming, Prompt Injection) plus traditional web security knowledge (XSS, SQL Injection Fundamentals, Command Injections).

Rated Medium and costed at Tier II, this module is highly relevant to real-world work. It introduces the layer of traditional security vulnerabilities and demonstrates how they compound with AI technologies. While not overly technically deep, it continues introducing learners to the attack surface of AI systems and how it's exploited. The combination of classic web vulns with LLM contexts is exactly what you'll encounter in production AI applications. As with other modules in the path, it pairs offensive techniques with their corresponding defenses, reinforcing that red teaming is ultimately about improving security posture, not just finding holes.
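To make the "classic web vulns in an LLM context" point concrete, here is a minimal sketch of the defensive half: treating model output as untrusted before it reaches a browser. It is illustrative only and not taken from the module. The `render_reply` helper and the sample payload are hypothetical; `html.escape` is from Python's standard library.

```python
import html

def render_reply(llm_output: str) -> str:
    """Wrap model output in a chat bubble, escaping it first.

    The LLM's text is handled like any other untrusted input: whoever
    influences the prompt may also influence what the model emits.
    """
    return f'<div class="reply">{html.escape(llm_output)}</div>'

# Hypothetical payload an attacker coaxed the model into producing.
payload = '<img src=x onerror="alert(1)">'
print(render_reply(payload))
```

Without the escaping step the payload would be inserted into the page as live markup, which is the same XSS problem web developers already know, just with the model as the delivery vehicle.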

Module 6: AI Data Attacks

Created by PandaSt0rm, this 25-section module is estimated at 3 days and marks the first Hard-rated content in the path. Where previous modules focused on prompt-level and output-level attacks, this one goes deeper into the data pipeline itself, targeting the foundation that AI systems are built on.

The module covers several sophisticated attack categories:

The hands-on content is substantial, walking through baseline model creation, attack implementation, and evaluation for each technique. You'll work with logistic regression, CNN architectures, and custom attack tooling.

Prerequisites include the core AI path modules plus strong Python skills and Jupyter Notebook familiarity. HTB highly recommends using your own machine for the practicals rather than the provided VM, as the training workloads benefit from local compute.

Rated Hard and costed at Tier II, I ran into the math like it was a brick wall. Luckily I was able to use ChatGPT to decode the mathematical symbols I didn't know and began developing the mathematical intuition required to really understand and exploit AI systems. The Hard rating is truly justified. This will be the first real roadblock in the course pathway for many learners. If you've been coasting on the Medium modules, expect to slow down here.

Module 7: Attacking AI - Application and System


Created by vautia, this 14-section module runs about 8 hours and zooms out from model and data attacks to examine the application and system layers of AI deployments. After the math-heavy data attacks module, this returns to Medium difficulty while covering equally critical attack surfaces.

The module covers application and system component vulnerabilities:

A significant portion focuses on the Model Context Protocol (MCP), the AI orchestration protocol introduced in 2024. You'll get a practical introduction to how MCP works, then dive into attacking vulnerable MCP servers and the risks of malicious MCP servers, with mitigations to round it out.

Prerequisites include the AI path modules plus SQL Injection Fundamentals, Command Injections, and Web Attacks.

Rated Medium and costed at Tier II, this module is an excellent blend of classic attack vectors (command injection, SQLi, info disclosure) with modern AI exploitation via MCP. It clearly shows how legacy flaws and AI risks combine, making this a highly valuable and insightful module. A welcome return to Medium and a little less math after the Hard data attacks module.
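As a rough illustration of how command injection carries over to tool-calling setups, the sketch below shows a hypothetical tool handler that receives a `host` argument from a model-generated tool call. It is not code from the module and does not use any MCP SDK; the function name, the regular expression, and the `ping` tool are all assumptions made for the example.

```python
import re

# Conservative allow-list for hostnames and IPv4 literals (an assumption
# made for this sketch; real validation depends on the tool).
_HOST_RE = re.compile(r"[A-Za-z0-9]([A-Za-z0-9.-]{0,251}[A-Za-z0-9])?")

def build_ping_argv(host: str) -> list[str]:
    """Build an argv list for a hypothetical 'ping' tool.

    The host comes from a model-generated tool call, so it is untrusted.
    Returning a list (to be executed without a shell) means characters
    such as ';' or '|' are never interpreted, and the allow-list rejects
    them before they get that far.
    """
    if not _HOST_RE.fullmatch(host):
        raise ValueError(f"rejected host argument: {host!r}")
    return ["ping", "-c", "1", host]

print(build_ping_argv("example.com"))
try:
    build_ping_argv("example.com; cat /etc/passwd")
except ValueError as err:
    print(err)
```

The vulnerable version of this handler would interpolate `host` into a shell string. The point is that the model sits between the attacker and the tool, but the fix is the same one you would apply to any web form.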

Module 8: AI Evasion - Foundations


Created by PandaSt0rm, this 12-section module runs about 8 hours and kicks off the three-module evasion series. It introduces inference-time evasion attacks, techniques that manipulate inputs to bypass classifiers or force targeted misclassifications at prediction time.

The module establishes the evasion threat model:

You'll build and attack a spam filter using the UCI SMS dataset, implementing GoodWords attacks in both white-box and black-box settings. The black-box content covers operating under query limits, including candidate vocabulary construction, adaptive selection strategies, and small-combination testing to minimize detection.
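For a feel of what a white-box GoodWords attack looks like, here is a toy sketch. It is not the module's implementation: the linear filter, its word weights, and the bias are all made up, and the real exercises use a model trained on the SMS dataset.

```python
# Hypothetical per-word weights of a toy linear spam filter
# (positive = spammy, negative = "good" / ham-like).
WEIGHTS = {
    "free": 2.0, "winner": 2.5, "prize": 1.5, "claim": 1.0,
    "meeting": -1.5, "thanks": -1.2, "tomorrow": -1.0, "lunch": -0.8,
}
BIAS = -0.5

def score(message: str) -> float:
    """Bias plus the weights of the distinct known words; > 0 means spam."""
    words = set(message.lower().split())
    return BIAS + sum(WEIGHTS.get(w, 0.0) for w in words)

def good_words_attack(message: str, budget: int = 5):
    """White-box GoodWords sketch: append the most ham-like words
    (most negative weights) until the filter stops flagging the message."""
    present = set(message.lower().split())
    candidates = sorted(
        (w for w in WEIGHTS if WEIGHTS[w] < 0 and w not in present),
        key=WEIGHTS.get,
    )
    added = []
    for word in candidates[:budget]:
        if score(message) <= 0:
            break
        message += " " + word
        added.append(word)
    return message, added

spam = "claim your free prize"
evasive, added = good_words_attack(spam)
print(score(spam), score(evasive), added)
```

In the black-box setting the attacker cannot read `WEIGHTS` and has to infer which words help from a limited number of queries, which is where the candidate vocabulary and adaptive selection strategies come in.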

Prerequisites build on the full path so far including the AI Data Attacks module. Strong Python skills and Jupyter Notebook familiarity are mandatory, and HTB highly recommends using your own machine for the practicals.

Rated Medium and costed at Tier II, this is when the course starts getting really into the weeds, readying you for deeper mathematical attacks and adversarial machine learning in the modules ahead. A great module for building intuition around machine learning and how to attack the models themselves, not just the applications around them.

Module 9: AI Evasion - First-Order Attacks

Created by PandaSt0rm, this 23-section module is estimated at 2 days and returns to Hard difficulty. It takes gradient-based adversarial techniques from theory to implementation, exploiting the differentiable structure of neural networks to craft perturbations that force misclassifications.

The module covers the core first-order attack methods:

Each attack includes full implementation, evaluation metrics, and visualization. The module has two skills assessments, reflecting the depth of content.
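To show the general shape of a first-order attack, the sketch below applies one step of the Fast Gradient Sign Method (FGSM) to a tiny logistic regression. FGSM is used here as a well-known stand-in, since this review does not list the specific attacks the module implements. The model weights and the input are hypothetical, and the module itself targets neural networks rather than a two-feature linear model.

```python
import math

# Hypothetical trained logistic-regression parameters.
W = [2.0, -1.0]
B = 0.0

def predict_proba(x):
    """P(y = 1 | x) for the toy model."""
    z = sum(w * xi for w, xi in zip(W, x)) + B
    return 1.0 / (1.0 + math.exp(-z))

def loss_gradient(x, y):
    """Gradient of binary cross-entropy with respect to the input x.

    For logistic regression this has the closed form (p - y) * W.
    """
    p = predict_proba(x)
    return [(p - y) * w for w in W]

def sign(g):
    """Return -1, 0 or 1 depending on the sign of g."""
    return (g > 0) - (g < 0)

def fgsm(x, y, eps):
    """One FGSM step: shift every feature by eps in the direction
    (sign of the gradient) that increases the loss."""
    grad = loss_gradient(x, y)
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

x, y = [1.0, 0.5], 1          # classified as class 1 before the attack
x_adv = fgsm(x, y, eps=0.6)   # perturbation bounded by 0.6 per feature
print(predict_proba(x), predict_proba(x_adv))
```

The same idea scales up to deep networks: the only thing that changes is that the gradient comes from backpropagation instead of a closed-form expression.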

Prerequisites include all previous AI modules plus basic understanding of neural networks and gradient computation. Strong Python and Jupyter skills are mandatory, and using your own machine is highly recommended. I also highly recommend using ChatGPT to help you understand the mathematical concepts. It's a powerful tool and will help you greatly.

Rated Hard and costed at Tier II, this was another module where I ran into a brick wall with the mathematics. At first much of the content was hieroglyphics to me; however, I was eventually able to understand it, and it fundamentally began to change the way I view the world and systems. The mathematical intuition gained here is second to none when it comes to how machine learning actually works and how it's exploitable. Worth the struggle.

Module 10: AI Evasion - Sparsity Attacks

Created by PandaSt0rm, this 28-section module is estimated at 3 days and is the most technically dense of the evasion trilogy. Where first-order attacks focus on minimizing perturbation magnitude, sparsity attacks flip the constraint: minimize how many features change, not how much they change.

The module covers the mathematical foundations and implementations:

The hands-on content is extensive, covering environment setup, implementing FISTA components, loss gradients, binary search, attack execution, visualizations, and sparsity analysis for both ElasticNet and JSMA approaches.
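One building block that ISTA/FISTA-style solvers for L1-regularised objectives rely on is the soft-thresholding (shrinkage) operator. The sketch below is a generic version of that operator, not the module's code; the function name and sample values are my own, and a version used inside an attack would presumably also need to keep pixel values in their valid range.

```python
def soft_threshold(z, lam):
    """Element-wise shrinkage: the proximal operator of lam * ||.||_1.

    Entries with |z_i| <= lam are set exactly to zero (this is what
    produces sparsity); larger entries are pulled toward zero by lam.
    """
    out = []
    for zi in z:
        if zi > lam:
            out.append(zi - lam)
        elif zi < -lam:
            out.append(zi + lam)
        else:
            out.append(0.0)
    return out

# Hypothetical dense perturbation after a gradient step.
delta = [0.75, -0.25, 0.125, -1.5, 0.0]
sparse = soft_threshold(delta, lam=0.25)
print(sparse)
print(sum(1 for v in sparse if v != 0.0), "non-zero entries")
```

Alternating a gradient step with this shrinkage step is what lets the optimisation zero out most of the perturbation instead of spreading small changes across every feature.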

Prerequisites include all previous AI modules through First-Order Attacks, plus understanding of neural networks, gradient computation, and optimization methods. Own machine highly recommended.

Rated Hard and costed at Tier II, this one is much like the last: very tough on the mathematics. But the intuition gained is transformative, especially around the different norms (L0, L1, L2, L_inf) and how they interact. It also gave insight into how adversarial algorithms are conceived and developed, not just how to use them. You'll leave understanding why these attacks work, not just that they work.
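If the norms are new to you, a few lines of Python go a long way. The sketch below computes all four for two made-up perturbations; the vectors are hypothetical and chosen only to make the contrast obvious.

```python
def l0(delta):
    """Number of features changed."""
    return sum(1 for d in delta if d != 0)

def l1(delta):
    """Total absolute change."""
    return sum(abs(d) for d in delta)

def l2(delta):
    """Euclidean size of the change."""
    return sum(d * d for d in delta) ** 0.5

def linf(delta):
    """Largest change to any single feature."""
    return max(abs(d) for d in delta)

# Two hypothetical perturbations of a 4-feature input.
dense = [0.5, -0.5, 0.5, -0.5]    # small change everywhere
sparse = [0.0, 0.0, 2.0, 0.0]     # big change to one feature

for name, d in (("dense", dense), ("sparse", sparse)):
    print(name, l0(d), l1(d), l2(d), linf(d))
```

Both perturbations have the same L1 norm (2.0), but the dense one wins on L_inf (0.5 vs 2.0) and the sparse one wins on L0 (1 vs 4). Which norm an attack constrains decides which of these it considers "small".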

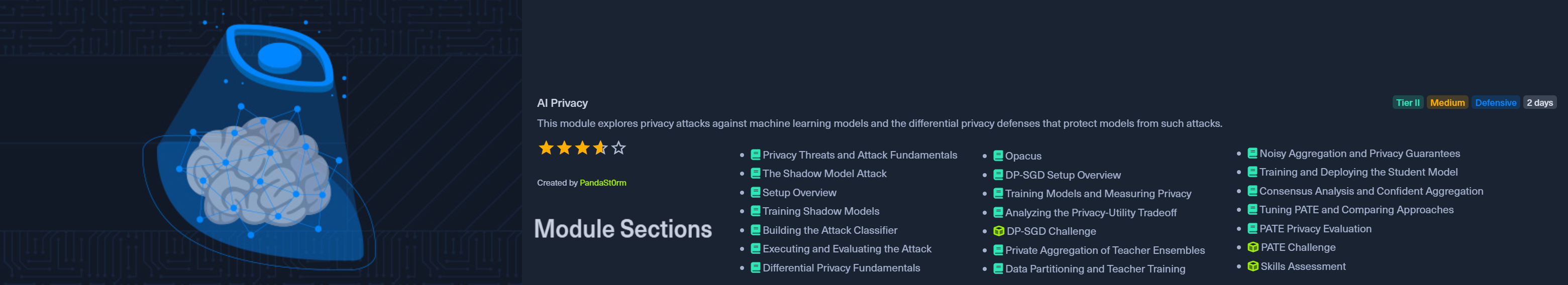

Module 11: AI Privacy

Created by PandaSt0rm, this 21-section module is estimated at 2 days and is notably the first defense-focused module in the path. It explores the privacy dimension of AI security, both the attacks that extract sensitive information from trained models and the defenses that protect against them.

The attack side covers Membership Inference Attacks (MIA):

The defense side covers differential privacy approaches:

Prerequisites include all previous AI modules, plus solid PyTorch familiarity and understanding of neural network training and optimization. Own machine is highly recommended over Pwnbox.

Rated Medium and costed at Tier II, I found this module informative in showing how membership inference attacks work and how models can be secured through training methods. Honestly, I thought it could have been rated Hard, as the math and algorithms pushed the limits of what I'd consider Medium territory. What I found particularly valuable was seeing the ML training side in more depth, understanding how different training approaches (standard vs DP-SGD vs PATE) produce models with fundamentally different security properties, and the concrete tradeoffs between privacy guarantees and model utility.
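The core of DP-SGD fits in a few lines, which helps explain where the utility cost comes from. The sketch below is a plain-Python illustration under my own assumptions (function names, hyperparameters, and gradients are all hypothetical); the module works in PyTorch, and this sketch does no privacy accounting at all.

```python
import math
import random

def clip(grad, max_norm):
    """Scale a per-example gradient so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]

def dp_sgd_step(params, per_example_grads, lr, max_norm, noise_multiplier, rng):
    """One DP-SGD update: clip each example's gradient, sum them, add
    Gaussian noise scaled to the clipping bound, average, then step."""
    n = len(per_example_grads)
    clipped = [clip(g, max_norm) for g in per_example_grads]
    summed = [sum(col) for col in zip(*clipped)]
    sigma = noise_multiplier * max_norm
    noisy_mean = [(s + rng.gauss(0.0, sigma)) / n for s in summed]
    return [p - lr * g for p, g in zip(params, noisy_mean)]

rng = random.Random(0)  # fixed seed so the sketch is repeatable
# Hypothetical per-example gradients for a 2-parameter model.
grads = [[3.0, 4.0], [0.3, 0.4], [-6.0, 8.0]]
print(dp_sgd_step([0.0, 0.0], grads, lr=0.1, max_norm=1.0,
                  noise_multiplier=1.0, rng=rng))
```

Clipping limits how much any single training example can move the model, and the noise hides what is left. Both also throw away useful signal, which is the privacy versus utility tradeoff in miniature.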

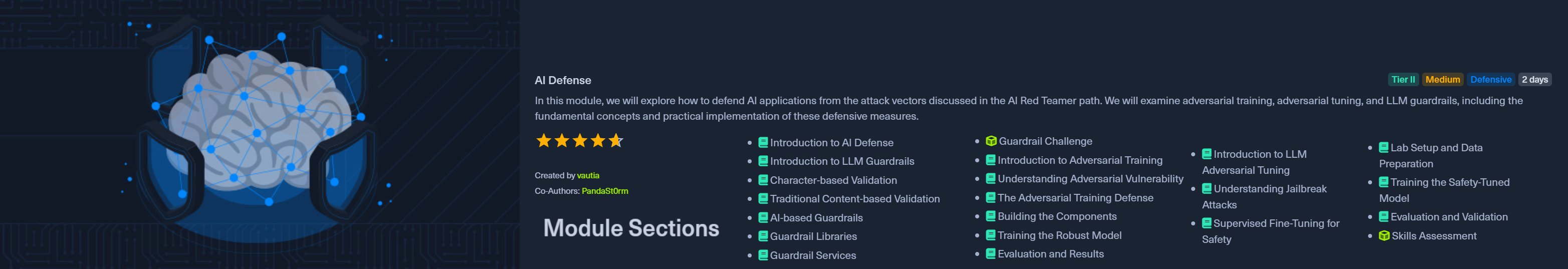

Module 12: AI Defense

Created by vautia with PandaSt0rm, this 21-section capstone module is estimated at 2 days and brings everything full circle. After spending the path learning to attack AI systems, you now learn to defend them, understanding both sides of the adversarial equation.

The module covers three main defensive approaches:

LLM Guardrails (application-layer defenses):

Model-level Defenses:

The module also covers advanced prompt injection tactics like priming attacks and how to evade guardrails, giving you both the offensive and defensive perspective.

Prerequisites include the core AI modules plus the evasion modules. Note that running the adversarial training and tuning code requires powerful hardware, but doing so is optional: you can complete the module without running it yourself.

Rated Medium and costed at Tier II, this was a wonderful capstone to the course. It covers advanced techniques while tying everything together and pushing the path over the line from educational to practically useful. This is the module that enables effective AI red teaming against real systems with safeguards in place. What I particularly appreciated was how it equips you not just to attack AI systems and find vulnerabilities, but to secure them and defend against the very attacks you've learned. That dual perspective is what separates a red teamer from just a hacker.

Final Verdict

The AI Red Teamer path delivers on its promise. At an estimated 19 days of content, it took me about 2 months to complete while balancing other commitments. That time investment was worth every hour. The path takes you from zero AI security knowledge to being capable of performing meaningful offensive assessments against AI systems, while also equipping you to recommend and implement defenses. The collaboration with Google shows in the quality and real-world relevance of the content.

This is not an easy path. The Medium-rated modules are genuinely Medium, and the Hard-rated modules (AI Data Attacks, First-Order Attacks, Sparsity Attacks) will challenge anyone without a strong mathematical background. But that challenge is precisely what makes the learning valuable. You'll emerge with skills that are genuinely rare in the security industry.

Ready to become an AI Red Teamer? [Check out the course on HTB Academy](https://academy.hackthebox.com/path/preview/ai-red-teamer)